Two weeks ago, I released Bash Argsparse 1.7, which brings a few bugfixes, some documentation updates, a function to help describe positional parameters, and full nounset compliance.

Along with these changes, I'm happy to announce that the bash-argsparse library package has been reviewed and accepted for inclusion in the Fedora project package repositories. After something like 8 years of inactivity in the Fedora project, I'm now a packager again, and I'm very happy about that. :)

The bash-argsparse package is now available for Fedora 20, Fedora 21, Fedora 22 (alpha) and rawhide. Availability for CentOS 6 and CentOS 7 through the EPEL repositories is just a matter of time. So you may now install bash-argsparse by just using yum or dnf.
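For a script that has to work on both older and newer releases, the choice between yum and dnf can be made at run time. The following is only a sketch: pick_pkg_manager is a hypothetical helper name, and the script prints the install command instead of running it.

```shell
#!/usr/bin/env bash

# Hypothetical helper: print the first available package manager, preferring dnf.
pick_pkg_manager() {
    local tool
    for tool in dnf yum; do
        if command -v "$tool" >/dev/null 2>&1; then
            printf '%s\n' "$tool"
            return 0
        fi
    done
    return 1
}

if tool=$(pick_pkg_manager); then
    # Only print the command; run it yourself once you are happy with it.
    echo "sudo $tool install bash-argsparse"
else
    echo "neither dnf nor yum found" >&2
fi
```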

Many many thanks to Haïkel Guémar, who reviewed the package and sponsored me as a packager.

The usual links:

* The bash-argsparse-1.7.tar.gz tarball

* The bash-argsparse-1.7-1.fc21.src.rpm Source RPM

* And of course the online documentation.

Article tags: Argsparse, Bash, Fedora, Linux

I've just released version 1.6.1 of the bash argsparse shell library. There's not much to say about it: there is no API change. It only brings some fixes for minor issues:

- The library can be re-sourced without error. Actually, it will not get re-loaded, but at least it will not trigger errors.

- Another issue has been fixed by dear fellow Florent Usseil, making argsparse more compliant with the nounset bash option.

- The spec file and the unittest script should now be compliant with the Fedora koji build system. If I'm not wrong, the bash-argsparse package should enter the Fedora repositories in a few days.
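The first two fixes can be illustrated with a small sketch. This is not the actual argsparse code: DEMO_LIB_LOADED and load_count are hypothetical names, and the "library" is a throwaway file. It shows a guard that makes re-sourcing harmless, written so that it does not trip over the nounset option.

```shell
#!/usr/bin/env bash
set -o nounset   # expanding an unset variable is now an error

# Hypothetical library file, written to a temporary path for the demo.
lib=$(mktemp)
cat > "$lib" <<'EOF'
# ${DEMO_LIB_LOADED-} expands to an empty string instead of failing under nounset.
if [[ -n ${DEMO_LIB_LOADED-} ]]; then
    return 0   # already loaded: skip the rest of the file
fi
DEMO_LIB_LOADED=1
load_count=$(( ${load_count-0} + 1 ))
EOF

. "$lib"
. "$lib"   # second sourcing is a no-op thanks to the guard
echo "loaded ${load_count} time(s)"
rm -f "$lib"
```

Writing a plain $DEMO_LIB_LOADED in the guard would abort the first sourcing under nounset, which is the kind of issue such a compliance fix addresses.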

Here are the download links :

Hoping it will be useful to you....

Article tags: Argsparse, Bash, Release

In my previous post, I described a kinda complicated, but command-line based and user-level, way to mass upload files to ownCloud, using the cadaver WebDAV client.

Well. Erm... There are WebDAV filesystem implementations out there. See?

$ yum search webdav|grep -i filesystem

davfs2.armv6hl: A filesystem driver for WebDAV

So I ran yum install davfs2, and that just worked. Then I configured davfs2; following pretty much any davfs2 tutorial is good enough.

Note that disabling locks is indeed required for ownCloud, so I needed to edit davfs2.conf:

use_locks 0
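For readers without a tutorial at hand, here is a rough sketch of the three pieces a davfs2 setup usually needs. It writes sample files into a scratch directory rather than touching the system. The secrets line layout and the fstab options (davfs type, user,noauto) are my understanding of davfs2 and should be checked against its documentation; the URL and credentials are placeholders.

```shell
#!/usr/bin/env bash
# Sketch only: generates sample files under a scratch directory, not under /etc.
scratch=$(mktemp -d)
url="https://your.owncloud.hostname/owncloud/remote.php/webdav"

# davfs2.conf: ownCloud needs locks disabled.
printf 'use_locks 0\n' > "$scratch/davfs2.conf"

# secrets (assumed layout): one line per share, "<url> <username> <password>".
printf '%s %s %s\n' "$url" your_username your_password > "$scratch/secrets"
chmod 600 "$scratch/secrets"

# fstab (assumed options): lets a regular user run "mount /mnt/owncloud" on demand.
printf '%s /mnt/owncloud davfs user,noauto 0 0\n' "$url" > "$scratch/fstab.sample"

cat "$scratch/davfs2.conf" "$scratch/secrets" "$scratch/fstab.sample"
```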

After a mount /mnt/owncloud to bind your ownCloud WebDAV share to a local directory, recursively uploading a full directory tree is as easy as this:

rsync -vr --progress --bwlimit=123 /path/to/my/directory/. /mnt/owncloud/my_sub_dir/.

In case the rsync stops in the middle of the upload, the same command line can be entered again to resume the upload.
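Since re-running the same rsync picks up where it stopped, the re-entering can be automated. A minimal sketch, assuming a hypothetical retry helper (not part of rsync or davfs2) that reruns a command until it succeeds or a maximum number of attempts is reached:

```shell
#!/usr/bin/env bash

# retry MAX DELAY CMD [ARGS...]
# Hypothetical helper: rerun CMD until it succeeds or MAX attempts have been made.
retry() {
    local max=$1 delay=$2 attempt
    shift 2
    for (( attempt = 1; attempt <= max; attempt++ )); do
        "$@" && return 0
        echo "attempt $attempt/$max failed" >&2
        # Wait before the next attempt, but not after the last one.
        (( attempt < max )) && sleep "$delay"
    done
    return 1
}

# Usage with the command from the post (left commented out on purpose):
# retry 5 30 rsync -vr --progress --bwlimit=123 /path/to/my/directory/. /mnt/owncloud/my_sub_dir/.
```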

So, no cadaver, no shell dark magic, just a mount and an rsync.

Easy.

Article tags: Linux, WebDAV, davfs, ownCloud

So, I've managed to install ownCloud on my CentOS with lighttpd.

But now I've got tons of files to push. Like loads of files. With weird file names and everything. OK, maybe not so weird, but anyway, let's see how we can do that.

First, find a command line utility

Obviously, the first thing one should consider is the mirall ownCloud desktop client GUI. It's pretty useful if you have a whole desktop and GUIs everywhere, and if you want to keep the files in sync and all. But right now, all I want to do is a single one-shot sync from a headless server, and there are generic ways to upload those files, since ownCloud has a WebDAV interface.

So let's search for WebDAV clients:

$ sudo yum search webdav | grep client

cadaver.x86_64 : Command-line WebDAV client

neon.x86_64 : An HTTP and WebDAV client library

Something tells me cadaver might be the easiest solution for me, here. Let's go!

yum install cadaver

For the record, cadaver is based on the neon library.

Cadaver uses the ~/.netrc file to store credentials and avoid having to enter the WebDAV username & password every 20 seconds. Its format is explained in man cadaver. (For your own sake, I strongly suggest you do NOT google man cadaver). But anyway, just put those 3 lines in the file.

machine your.owncloud.hostname

login your_username

password your_password
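Because that file holds a clear-text password, it is worth creating it with owner-only permissions. A minimal sketch, assuming a hypothetical write_netrc helper and the standard netrc tokens (machine, login, password); values containing spaces are not handled:

```shell
#!/usr/bin/env bash

# write_netrc FILE HOST USER PASSWORD
# Hypothetical helper: write a 3-line netrc entry readable by its owner only.
write_netrc() {
    local file=$1 host=$2 user=$3 password=$4
    (
        umask 077   # a newly created file gets mode 600
        printf 'machine %s\nlogin %s\npassword %s\n' \
            "$host" "$user" "$password" > "$file"
    )
    chmod 600 "$file"   # also tighten a file that already existed
}

# Usage (this overwrites any existing ~/.netrc, so it is left commented out):
# write_netrc ~/.netrc your.owncloud.hostname your_username your_password
```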

If put correctly, this should now work out of the box:

$ cadaver https://your.owncloud.hostname/owncloud/remote.php/webdav

dav:/owncloud/remote.php/webdav/> ls

Listing collection `/owncloud/remote.php/webdav/': collection is empty.

Well, I have not uploaded anything to my ownCloud yet. Let's try to upload something:

dav:/owncloud/remote.php/webdav/> put bash-argsparse-1.6.tar.gz

Uploading bash-argsparse-1.6.tar.gz to `/owncloud/remote.php/webdav/bash-argsparse-1.6.tar.gz':

Progress: [=============================>] 100.0% of 44822 bytes succeeded.

dav:/owncloud/remote.php/webdav/> ls

Listing collection `/owncloud/remote.php/webdav/': succeeded.

bash-argsparse-1.6.tar.gz 44822 Oct 1 13:12

C00l.

OK, but put only works for a single file. To upload many files, cadaver has an mput command, but it has 2 annoying limitations:

- you cannot specify the destination directory (WebDAV talks about collections, but never mind that) on the WebDAV server

- it is not recursive -_-

The first limitation can easily be worked around by performing a cd before the mput. The second one is less trivial to work around, but easy enough, if you ask me.

So, considering we want to recursively upload a directory named pics in the root collection of the WebDAV server, proceed in two steps:

- first create all the collections,

- then upload all the files.

If you don't want to end up uploading your files in random directories, then you will want to do it in 2 steps. You see, cadaver offers no easy way to check whether a command has been successfully performed (or not). So manually check the result after the first step, then perform the second one.

Create all the collections

So, first things first, to create all the collections on the server, using bash4, let's enter the directory where the pics directory is and run:

shopt -s globstar

dirs=( pics/**/ )

dirs=( "${dirs[@]// /\\ }" )

cadaver https://your.owncloud.hostname/owncloud/remote.php/webdav <<<"mkcol ${dirs[*]}"

It should produce an output like:

dav:/owncloud/remote.php/webdav/> mkcol pics/ pics/Japan/ pics/Japan/After\ Kiyomizu/ pics/Japan/Akiba/ pics/Japan/Ghibli/ pics/Japan/Hakone/ pics/Japan/Heian/ pics/Japan/Hello\ Kitty/ ...

Creating `pics/': succeeded.

Creating `pics/Japan/': succeeded.

Creating `pics/Japan/After Kiyomizu/': succeeded.

Creating `pics/Japan/Akiba/': succeeded.

Creating `pics/Japan/Ghibli/': succeeded.

Creating `pics/Japan/Hakone/': succeeded.

Creating `pics/Japan/Heian/': succeeded.

Creating `pics/Japan/Hello Kitty/': succeeded.

...

dav:/owncloud/remote.php/webdav/>

Connection to `your.owncloud.hostname' closed.

So just check you have everything you need, either on the ownCloud web interface or by navigating through the WebDAV URL using the cadaver command line.

As you might have noticed, the only character that has been escaped is the space char. If your directories or files have more funny chars that cadaver does not understand as part of the name, just try to escape them with the same method. Avoid external commands if you can.
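The same escaping idea can be extended to a few more characters without any external command. This is a sketch: cadaver_escape is a hypothetical helper, and the set of characters it escapes (space, backslash, single and double quotes) is an assumption about what cadaver's command parser treats specially, so adjust it to what you actually run into.

```shell
#!/usr/bin/env bash

# Hypothetical helper: backslash-escape characters assumed to be special to cadaver.
cadaver_escape() {
    local in=$1 out= c i
    for (( i = 0; i < ${#in}; i++ )); do
        c=${in:i:1}
        case $c in
            ' '|\\|\'|\") out+="\\$c" ;;   # prefix with a backslash
            *)            out+=$c ;;
        esac
    done
    printf '%s\n' "$out"
}

cadaver_escape 'pics/Japan/After Kiyomizu/'
# prints: pics/Japan/After\ Kiyomizu/
```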

Now about uploading files

That is a bit trickier, since regular files need to be filtered from directories. But using the get_files function below, it gets a bit easier.

After defining the get_files function, bash users can do this to upload the files:

get_files() {

files=( * )

local i

for i in "${!files[@]}"

do

[[ ! -f ${files[$i]} ]] && unset "files[$i]"

done

}

shopt -s globstar

for dir in pics/**/

do

(

cd "$dir" || exit

get_files

files=( "${files[@]// /\\ }" )

cadaver https://your.owncloud.hostname/owncloud/remote.php/webdav <<EOF

cd ${dir// /\\ }

mput ${files[*]}

EOF

)

done

( Of course, zsh users can just use this instead of the get_files function:

files=( *(.) )

)

And voila.

And don't forget to clean your mess after the upload and remove the file that contains your ownCloud account password. :-P

rm -v ~/.netrc

Article tags: Linux, WebDAV, cadaver, ownCloud

And so, as I was saying, I wanted to setup ownCloud.

For the record, I'm using CentOS 6, which is a few years old. The EPEL6 repository only provides ownCloud 4.5, so I needed to find ownCloud 6 somewhere else. So I've looked at:

Anyway, rebuilding the owncloud SRPM creates not only an owncloud-6.0.4-3.el6.noarch.rpm (which contains the ownCloud software itself), but also 3 packages for database backends:

- owncloud-mysql-6.0.4-3.el6.noarch.rpm

- owncloud-postgresql-6.0.4-3.el6.noarch.rpm

- owncloud-sqlite-6.0.4-3.el6.noarch.rpm (I've picked this one, for what it's worth).

There are also 2 additional packages which provide ownCloud configurations for some web servers:

- owncloud-httpd-6.0.4-3.el6.noarch.rpm (for apache web server)

- owncloud-nginx-6.0.4-3.el6.noarch.rpm (for nginx web server, obviously)

Since I hate configuring apache, and don't really know nginx, I've made up my mind for lighttpd. But as you can see, there's no configuration package for it.

Impossible!, you said? Don't tell me what I can't do.

First things first: install the packages:

cd ~rpmbuild/RPMS/noarch && yum --enablerepo=remi localinstall owncloud-6.0.4-3.el6.noarch.rpm owncloud-sqlite-6.0.4-3.el6.noarch.rpm

To make things easier for me, I've also installed the owncloud-httpd package to base my lighttpd configuration upon something known-working. The owncloud-httpd package provides this file:

Alias /owncloud /usr/share/owncloud

<Directory /usr/share/owncloud/>

Options -Indexes

<IfModule mod_authz_core.c>

# Apache 2.4

Require local

</IfModule>

<IfModule !mod_authz_core.c>

# Apache 2.2

Order Deny,Allow

Deny from all

Allow from 127.0.0.1

Allow from ::1

</IfModule>

ErrorDocument 404 /core/templates/404.php

php_value upload_max_filesize 512M

php_value post_max_size 512M

php_value memory_limit 512M

SetEnv htaccessWorking true

RewriteEngine on

RewriteRule .* - [env=HTTP_AUTHORIZATION:%{HTTP:Authorization},last]

</Directory>

If you read those lines (almost) one by one, you realize it is not that hard to translate them to lighttpd syntax. So, considering I've dedicated a virtual host to ownCloud, here is, roughly, what my /etc/lighttpd/vhosts.d/owncloud.conf configuration file looks like, with some additional comments just for you to understand it a bit more.

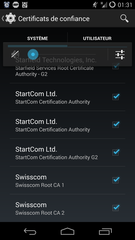

$SERVER["socket"] == ":443" {

ssl.engine = "enable"

# StartSSL CA certificate and intermediate certificate

ssl.ca-file = "/etc/pki/tls/startcom.crt"

# My own certificate, generated by startssl.

ssl.pemfile = "/etc/pki/tls/MY_STARTSSL_CERT.pem"

$HTTP["host"] == "host.domain.tld" {

var.server_name = "host.domain.tld"

# This does not really matter.

server.document-root = "/srv/lighttpd/" + server_name

server.name = server_name

# Bind URL path to filesystem path. (Alias /owncloud /usr/share/owncloud)

alias.url = ( "/owncloud/" => "/usr/share/owncloud/" )

# Disable indexes. (Options -Indexes)

$HTTP["url"] =~ "^/owncloud($|/)" {

dir-listing.activate = "disable"

}

$HTTP["url"] =~ "^/owncloud/data/" {

url.access-deny = ("")

}

# Redirect / to /owncloud/

url.redirect = (

"^/(index.php|owncloud)?$" => "https://" + server_name + "/owncloud/"

)

# Treat *everything* under remote.php as php. Not an option.

$HTTP["url"] =~ "^/owncloud/remote.php/.*" {

fastcgi.map-extensions = ( "" => ".php" )

}

# Owncloud 404 page (ErrorDocument 404 /core/templates/404.php)

server.error-handler-404 = "/owncloud/core/templates/404.php"

# It looks like ownCloud also has a 403 handler.

server.error-handler-403 = "/owncloud/core/templates/403.php"

# Specific logfile

accesslog.filename = log_root + "/" + server_name + "/access.log"

}

}

This configuration file does not include an implementation of the php_value directives. This means that if you do not do anything about it, you won't be able to upload files bigger than 2MB. Out of laziness, I've decided to change the system-wide /etc/php.ini. The changes are pretty straightforward, so I won't talk more about them here.
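For completeness, the three php_value lines from the Apache file translate to three php.ini settings. The sketch below applies them to a scratch file, not to /etc/php.ini; set_ini is a hypothetical helper, and the 512M values are simply the ones from the owncloud-httpd configuration.

```shell
#!/usr/bin/env bash

# set_ini FILE KEY VALUE
# Hypothetical helper: replace an uncommented "KEY = ..." line, or append one.
set_ini() {
    local file=$1 key=$2 value=$3 tmp
    tmp=$(mktemp)
    if grep -q "^[[:space:]]*${key}[[:space:]]*=" "$file"; then
        sed "s|^[[:space:]]*${key}[[:space:]]*=.*|${key} = ${value}|" "$file" > "$tmp"
    else
        cat "$file" > "$tmp"
        printf '%s = %s\n' "$key" "$value" >> "$tmp"
    fi
    cat "$tmp" > "$file"   # keep the original file's ownership and mode
    rm -f "$tmp"
}

# Demo on a scratch file; review the result before doing the same to /etc/php.ini.
ini=$(mktemp)
printf 'upload_max_filesize = 2M\npost_max_size = 8M\n' > "$ini"
for key in upload_max_filesize post_max_size memory_limit; do
    set_ini "$ini" "$key" 512M
done
cat "$ini"
```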

For the record, the $HTTP["url"] =~ "^/owncloud/remote.php/.*" { fastcgi.map-extensions = ( "" => ".php" ) } blob is a fix for the (in)famous "Why, Ô, why the hell do files ending with a 0 in their name fail to upload ???" bug. It was... tricky... to track down, but the fix makes sense once you understand how lighty works internally. This bug will happen more often than you think: the ownCloud Android app splits big (as in "more than 10MiB") files into chunks... and the first chunk of a big foo file will be foo-0, which will trigger that bug.

The last thing you are required to perform is to change ownership of some writable files for lighttpd.

chown -R lighttpd:lighttpd /etc/owncloud /var/lib/owncloud

Unfortunately, this will be required every time you update the owncloud packages.

After tweaking PHP and restarting lighttpd, and provided that you have a working generic lighttpd configuration (PHP, HTTPS, redirections, etc.), you should now be able to:

- Create and share contacts

- Use calendars

- Upload up-to-512MB files through the web interface.

It should be noted that I installed my ownCloud 6 instance in the very last days prior to the ownCloud 7 release. So, in early September, I picked the owncloud-7.0.2-2.fc20 SRPM from the Fedora koji build platform and rebuilt it for my CentOS release. The package rebuild, package update (+ that chown thingy -_-) and internal ownCloud upgrade went without any problem. The lighttpd configuration itself did not require any further tweaking.

OwnCloud 7, besides the overall general improvement, fixes a few issues I had to patch up manually in ownCloud 6 (most of the fixes are already reported on GitHub), so I strongly suggest upgrading to version 7. I still have some minor bugs in the contact app (categories don't seem to be correctly set all the time), ...

But honestly, for now...

It just works.

Article tags: Centos, Fedora, Lighttpd, Linux, ownCloud

When you're about to set up your own ownCloud, and when you know you will be using it mainly through a GSM network, the first thing you need is an SSL certificate, so that regular users won't be able to spy on your communications.

The first option I had in mind was to create a stupid self-signed certificate.

And I did it.

It worked.

Honest.

But the problem is, when you do that, you have to import the certificate into all the browsers and clients you're about to use... Plus, Android was sending me warnings all the time after I imported the self-signed authority certificate: "be careful, network is |ns3cur3, d00d".

So my second option was to obtain a trusted certificate, signed by a trusted authority. And honestly I did not want to spend money on it. It was for my own personal usage, for the gods' sake. So after a bit of research, I found 3 authorities doing this: Gandi, CAcert and StartSSL.

While Gandi can actually provide a one-time 1-year SSL certificate, and while CAcert is not recognized by Android, http://startssl.com can actually offer you a 1-year SSL certificate. For free. And when the certificate expires, you can just create another one. And, cherry on top, StartSSL is a trusted authority on Android.

There are some limitations, of course. But for a single stupid https vhost it was enough.

And voila.

Article tags: Lighttpd, SSL, https

.... And I wanted it to be google-free.

So I've been advised to use ownCloud.... And I wanted it to run on lighttpd.

So I've looked for help on the net... And it seemed nobody ever publicly did that.

So I've managed to make it work... And it was painful.

So a friend of mine told me I should publish my configuration... And I agreed, but I had no website to publish it on...

So now, I got myself a blog.... And here you are.

Laugh. -_-

Article tags: TheEnd

2015-03-19 - No comments
